|

Final-year PhD Candidate @ CS Brown University Email / CV / Google Scholar / Github / LinkedIn / Twitter / Blog |

|

I am a final-year PhD candidate in Computer Science at Brown University and a member of Brown Visual Computing Group, advised by Professor James Tompkin. During my PhD, I was fortunate to collaborate with Adam Harley, Mikaela Angelina Uy, and Leonidas Guibas from Stanford University. I received my Master in Computer Science from Columbia University, advised by Professor Shuran Song and Professor Shih-Fu Chang. I completed my Bachelor in Computer Science at Fudan University and was a visiting student at MIT EECS (CSAIL). I was a research intern at NVIDIA Research with Abhishek Badki, Hang Su, and Orazio Gallo, and at Meta Reality Labs with Numair Khan, Lei Xiao, and Douglas Lanman. |

|

|

|

|

|

|

|

|

|

Machines that truly understand our world must grasp how the 3-D world moves, and invites action. My work aims to endow artificial agents with this spatiotemporal intelligence, blending vision, geometry, and high-level reasoning so they can perceive, predict, and plan in real time.

Foundation Models

Multimodal LLMs

Video Generation

Reinforcement Learning

World Models

Machine Learning

|

|

|

|

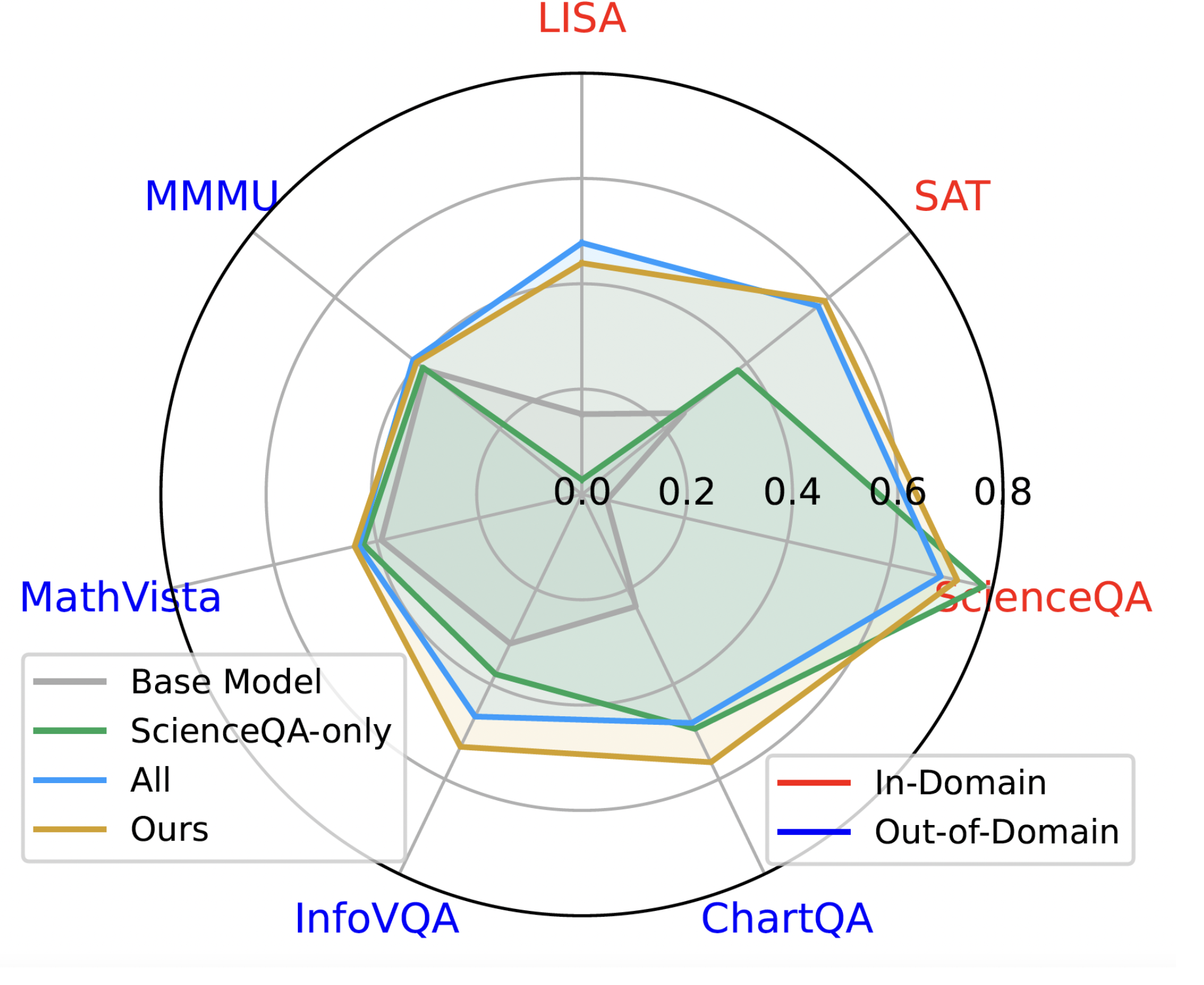

Yiqing Liang, Jielin Qiu, Wenhao Ding, Zuxin Liu, James Tompkin, Mengdi Xu, Mengzhou Xia, Zhengzhong Tu, Laixi Shi, Jiacheng Zhu Under Review, 2025 project / paper / data / code / bibtex We introduce MoDoMoDo, a systematic post-training framework for Multimodal LLM RLVR, featuring a rigorous data mixture problem formulation and benchmark implementation. |

|

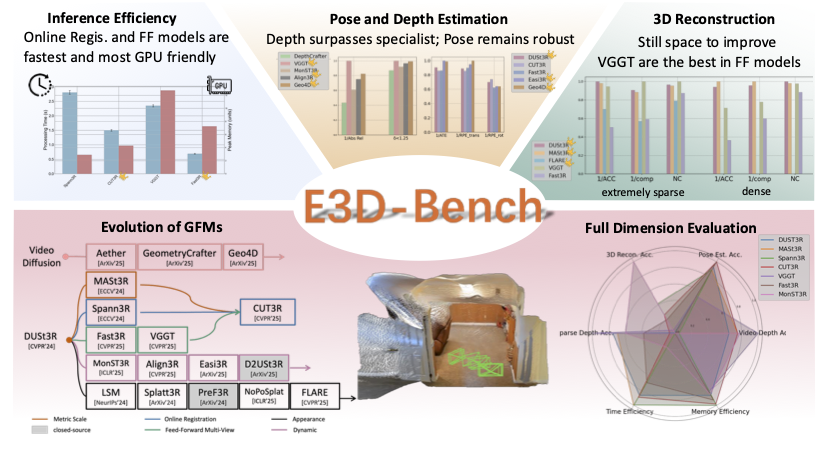

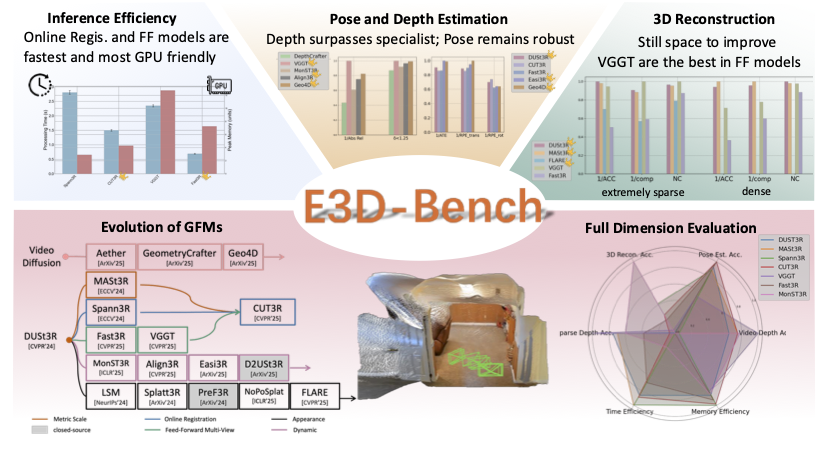

Wenyan Cong, Yiqing Liang, Yancheng Zhang, Ziyi Yang, Yan Wang, Boris Ivanovic, Marco Pavone, Chen Chen, Zhangyang Wang, Zhiwen Fan Under Review, 2025 project / paper / code / bibtex We present the first comprehensive benchmark for 3D end‑to‑end 3D geometric foundation models, covering five core tasks: sparse-view depth estimation, video depth estimation, 3D reconstruction, multi-view pose estimation, novel view synthesis, and spanning both standard and challenging out-of-distribution datasets. |

|

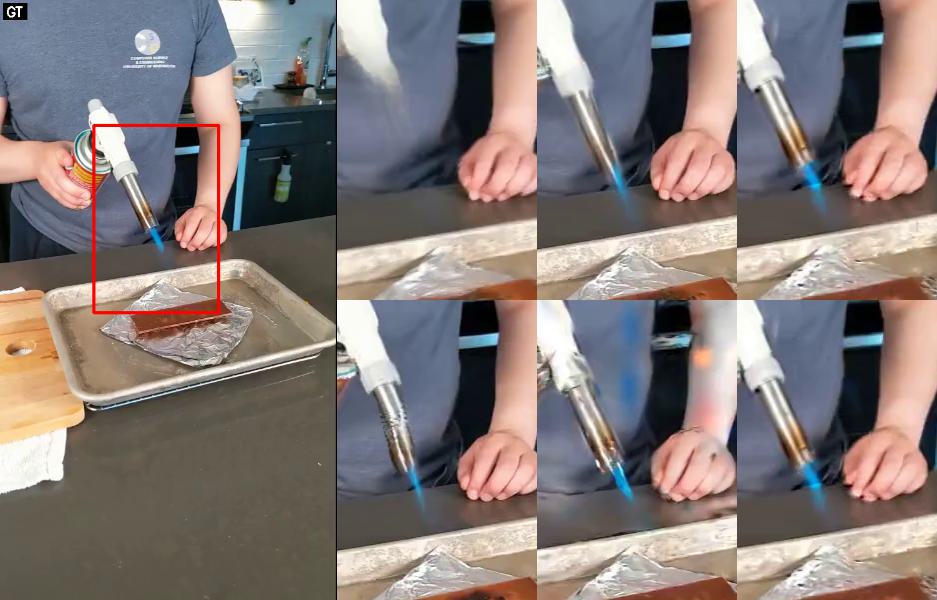

Yiqing Liang, Abhishek Badki*, Hang Su*, James Tompkin, Orazio Gallo CVPR, 2025 Oral, Award Candidate (0.48%) project / paper / video / code / bibtex We present ZeroMSF, the first generalizable 3D foundation model that understands monocular scene flow for diverse real-world scenarios, utilizing our curated data recipe of 1M synthetic training samples. |

|

Yiqing Liang, Mikhail Okunev, Mikaela Angelina Uy, Runfeng Li, Leonidas J. Guibas, James Tompkin, Adam Harley TMLR, 2025 project / paper / data / code / bibtex We present a benchmark of dynamic Gaussian Splatting methods for monocular view synthesis, combining existing datasets and a new synthetic dataset to provide standardized comparisons and identify key factors affecting efficiency and quality. |

|

Yiqing Liang, Numair Khan, Zhengqin Li, Thu Nguyen-Phuoc, Douglas Lanman, James Tompkin, Lei Xiao CVPR, 2024, CV4MR WACV, 2025 project / paper / code / bibtex We propose GauFRe: a dynamic scene reconstruction method using deformable 3D Gaussians for monocular video that is efficient to train, renders in real-time and separates static and dynamic regions. |

|

Yiqing Liang, Eliot Laidlaw, Alexander Meyerowitz, Srinath Sridhar, James Tompkin ICCV, 2023 project / paper / code / bibtex We present SAFF: a dynamic neural volume reconstruction of a casual monocular video that consists of time-varying color, density, scene flow, semantics, and attention information. |

|

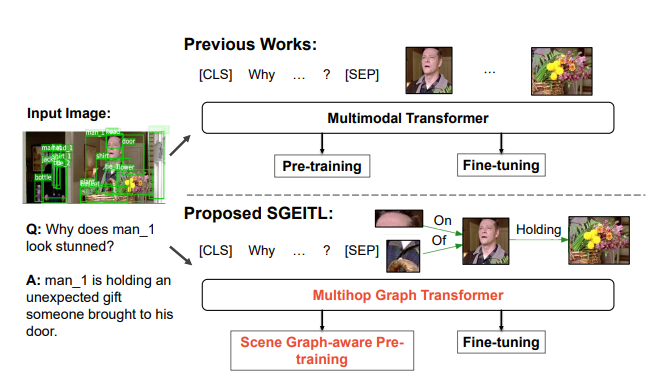

Zhecan Wang*, Haoxuan You*, Liunian Harold Li, Alireza Zareian, Suji Park, Yiqing Liang, Kai-Wei Chang, Shih-Fu Chang AAAI, 2022 paper / bibtex We propose a Scene Graph Enhanced Image-Text Learning (SGEITL) framework to incorporate visual scene graph in commonsense reasoning |

|

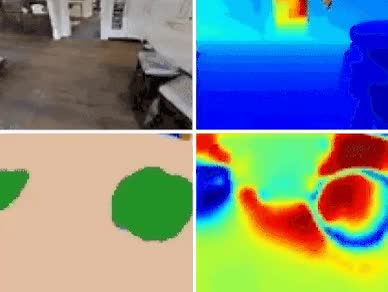

Yiqing Liang, Boyuan Chen, Shuran Song ICRA, 2021 project / paper / video / code / bibtex We explicitly model scene priors using a confidence-aware semantic scene completion module to complete the scene and guide the agent's navigation planning. |

|

Organizer:

Conference Reviewing: NeurIPS / CVPR / ICCV / Eurographics / WACV / IROS / ICRA / AAAI / 3DV Journal Reviewing: TPAMI / RA-L / TVCJ |

|

Based on Jon Barron's template. |